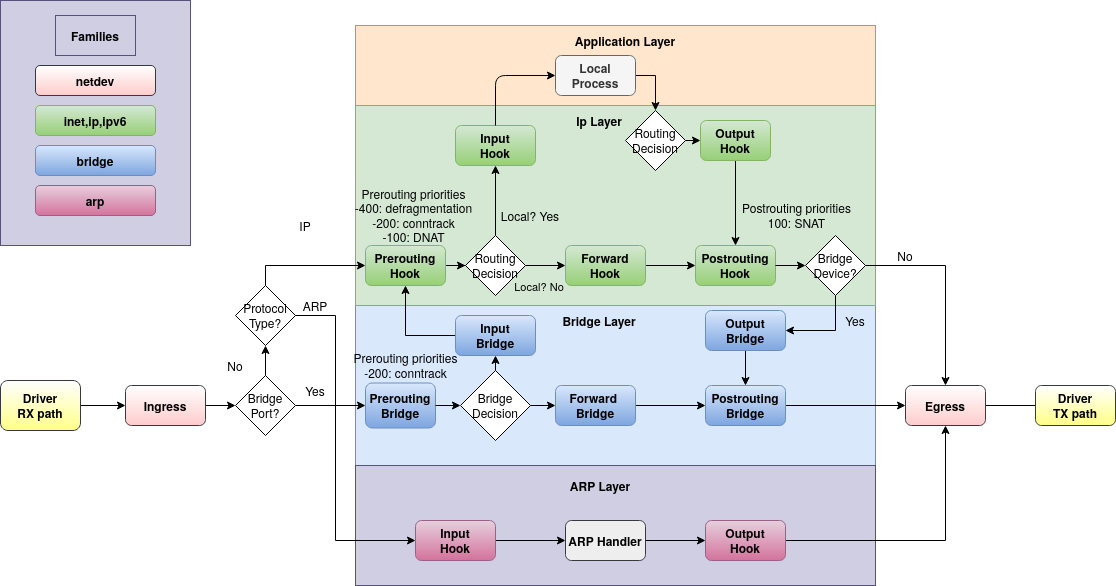

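I can see packets being logged/accepted in the `forward` chain, and they are correctly marked with the right outbound interface, but they never hit the rule in the `postrouting` chain, and `tcpdump` never shows any packets leaving the interface. Per this diagram, the routing decision has already happened (which is why the outbound interface shows up in the `forward` chain), and there are no further decisions to be made between `forward` and `postrouting`. So what process is causing the packets to be dropped?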

The packets arrive over a GRE tunnel on `eth1`, which delivers them to `source_interface`. They should leave via a WireGuard interface named `destination_interface`.

$ ip addr show source_interface

14: source_interface@NONE: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 8973 qdisc noqueue state UNKNOWN group default qlen 1000

link/gre 10.1.0.219 peer 10.1.0.1

inet 10.192.122.5/31 scope global source_interface

valid_lft forever preferred_lft forever

inet6 fe80::5efe:a01:db/64 scope link

valid_lft forever preferred_lft forever

$ ip addr show destination_interface

15: destination_interface: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 1420 qdisc noqueue state UNKNOWN group default qlen 1000

link/none

inet 10.192.122.3/31 brd 10.192.122.3 scope global destination_interface

valid_lft forever preferred_lft forever

$ ip tun

source_interface: gre/ip remote 10.1.0.1 local 10.1.0.219 ttl inherit key 168143513

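The routing table is set up so that there is a route sending these packets out `destination_interface`. This box is a bit unusual because it is a "bump in the wire" rather than a layer 3 router. So packets from a.b.0.0/16 will come in on `source_interface` but should go out on `destination_interface`. You'll have to trust me on that.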

$ ip route

default via 10.1.0.1 dev eth1 metric 10001

10.1.0.0/24 dev eth1 proto kernel scope link src 10.1.0.219

10.192.122.2/31 dev destination_interface proto kernel scope link src 10.192.122.3

10.192.122.4/31 dev source_interface proto kernel scope link src 10.192.122.5

a.b.0.0/16 dev destination_interface scope link

$ ip route get a.b.c.d from a.b.x.y iif source_interface

a.b.c.d from a.b.x.y dev destination_interface

cache iif source_interface

I see the packets arrive. I see the routing decision happen. But between the `forward` and `postrouting` chains, the packets disappear.

trace id f6179072 ip tablename prerouting packet: iif "source_interface" @ll,0,160 393920416270895464991677841829446786106534199515 ip saddr a.b.x.y ip daddr a.b.c.d ip dscp cs0 ip ecn not-ect ip ttl 64 ip id 29177 ip length 128 udp sport 60448 udp dport 60449 udp length 108 @th,64,96 6499185482949407548864728065

trace id f6179072 ip tablename prerouting rule iifname "source_interface" udp dport 60448-60449 counter packets 1341 bytes 118842 nftrace set 1 (verdict continue)

trace id f6179072 ip tablename prerouting verdict continue

trace id f6179072 ip tablename prerouting policy accept

trace id f6179072 ip tablename forward packet: iif "source_interface" oif "destination_interface" @ll,0,160 393920416270895464991677841829446786106534199515 ip saddr a.b.x.y ip daddr a.b.c.d ip dscp cs0 ip ecn not-ect ip ttl 63 ip id 29177 ip length 128 udp sport 60448 udp dport 60449 udp length 108 @th,64,96 6499185482949407548864728065

trace id f6179072 ip tablename forward rule udp dport 60448-60449 counter packets 930 bytes 82380 (verdict continue)

trace id f6179072 ip tablename forward verdict continue

trace id f6179072 ip tablename forward policy accept
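To make the point where a traced packet vanishes easier to spot, here is a minimal sketch that condenses trace output to the last chain seen for each trace id. The function name `last_chain_per_trace` is hypothetical, and it assumes the line layout shown above (`trace id <id> <family> <table> <chain> ...`).

```shell
#!/bin/sh
# Hypothetical helper: read nft trace output on stdin and print, for each
# trace id, the last chain in which that packet was seen.
# Assumes lines of the form: trace id <id> <family> <table> <chain> ...
last_chain_per_trace() {
    awk '
        $1 == "trace" && $2 == "id" {
            if (!($3 in last)) order[++n] = $3   # remember first-seen order
            last[$3] = $6                        # field 6 is the chain name
        }
        END {
            for (i = 1; i <= n; i++) print order[i], last[order[i]]
        }
    '
}
```

Something like `timeout 10 nft monitor trace | last_chain_per_trace` should work, since the summary is only printed once the input ends. A packet whose last chain is `forward` rather than `postrouting` matches the behaviour described here.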

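I have double-checked that `forwarding`/`ip_forward` is on, and that `rp_filter` is off (because of the bump-in-the-wire setup). I also turned on `log_martians` just in case, but nothing is being logged.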

$ sysctl -a | egrep 'ipv4\.conf\.(all|default|source_interface|destination_interface)\.(forwarding|log_martians|rp_filter) |ipv4\.ip_forward '

net.ipv4.conf.all.forwarding = 1

net.ipv4.conf.all.log_martians = 1

net.ipv4.conf.all.rp_filter = 0

net.ipv4.conf.default.forwarding = 1

net.ipv4.conf.default.log_martians = 1

net.ipv4.conf.default.rp_filter = 0

net.ipv4.conf.source_interface.forwarding = 1

net.ipv4.conf.source_interface.log_martians = 1

net.ipv4.conf.source_interface.rp_filter = 0

net.ipv4.conf.destination_interface.forwarding = 1

net.ipv4.conf.destination_interface.log_martians = 1

net.ipv4.conf.destination_interface.rp_filter = 0

net.ipv4.ip_forward = 1
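The same check can be scripted. This is a minimal sketch, assuming the expected values are forwarding on and reverse-path filtering off everywhere; the function name `check_forwarding_sysctls` is hypothetical. As far as I understand the kernel documentation, the effective `rp_filter` value is the maximum of the `all` and per-interface settings, which is why every interface is checked rather than only `all`.

```shell
#!/bin/sh
# Hypothetical helper: read "key = value" lines (as printed by sysctl -a)
# on stdin and report forwarding-related keys with an unexpected value.
# Assumed expectations: ip_forward = 1, *.forwarding = 1, *.rp_filter = 0.
check_forwarding_sysctls() {
    awk -F' = ' '
        $1 ~ /^net\.ipv4\.ip_forward$/           && $2 != 1 { print "unexpected: " $0; bad = 1 }
        $1 ~ /^net\.ipv4\.conf\..*\.forwarding$/ && $2 != 1 { print "unexpected: " $0; bad = 1 }
        $1 ~ /^net\.ipv4\.conf\..*\.rp_filter$/  && $2 != 0 { print "unexpected: " $0; bad = 1 }
        END { exit (bad ? 1 : 0) }               # non-zero exit if anything was flagged
    '
}
```

Usage would be `sysctl -a 2>/dev/null | check_forwarding_sysctls`; no output and a zero exit status mean every matching key had the assumed value.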

There is nothing in nftables other than the logging/counter rules:

bash-4.2# nft list tables

table ip tablename

bash-4.2# nft list table tablename

table ip tablename {

chain prerouting {

type filter hook prerouting priority 0; policy accept;

iifname "source_interface" udp dport 60448-60449 counter packets 41327 bytes 3657090 nftrace set 1

}

chain postrouting {

type filter hook postrouting priority 0; policy accept;

udp dport 60448-60449 counter packets 0 bytes 0

}

chain forward {

type filter hook forward priority 0; policy accept;

udp dport 60448-60449 counter packets 40916 bytes 3620628

}

}
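For comparing the counters side by side, here is a minimal sketch that pulls the packet count out of each chain in a listing like the one above. The function name `chain_packet_counts` is hypothetical, and it assumes the `chain <name> {` and `counter packets <n>` layout shown in that listing.

```shell
#!/bin/sh
# Hypothetical helper: read an nft table listing on stdin and print
# "<chain> <packets>" for every counter statement found.
chain_packet_counts() {
    awk '
        $1 == "chain" { chain = $2 }             # remember the current chain
        {
            for (i = 1; i < NF; i++)
                if ($i == "counter" && $(i + 1) == "packets")
                    print chain, $(i + 2)        # value following "packets"
        }
    '
}
```

With `nft list table tablename | chain_packet_counts`, the listing above would reduce to three lines, making it obvious that `prerouting` and `forward` are counting while `postrouting` stays at 0.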

TCPDUMP:

$ tcpdump -c10 -i source_interface udp -lenn

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on elasticast, link-type LINUX_SLL (Linux cooked), capture size 262144 bytes

23:48:20.410079 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

23:48:20.514049 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

23:48:20.618069 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

23:48:20.722078 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

23:48:20.826039 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

23:48:20.930021 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

23:48:21.034045 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

23:48:21.138037 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

23:48:21.242025 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

23:48:21.346089 In ethertype IPv4 (0x0800), length 144: a.b.x.y.60448 > a.b.c.d.60449: UDP, length 100

10 packets captured

10 packets received by filter

0 packets dropped by kernel

$ timeout 30 tcpdump -c10 -i destination_interface udp -lenn

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on wg0, link-type RAW (Raw IP), capture size 262144 bytes

0 packets captured

0 packets received by filter

0 packets dropped by kernel

I can reach the other end of the WireGuard tunnel:

$ ip route get 10.192.122.2

10.192.122.2 dev destination_interface src 10.192.122.3 uid 0

cache

bash-4.2# ping -c2 10.192.122.2

PING 10.192.122.2 (10.192.122.2) 56(84) bytes of data.

64 bytes from 10.192.122.2: icmp_seq=1 ttl=64 time=19.6 ms

64 bytes from 10.192.122.2: icmp_seq=2 ttl=64 time=0.412 ms

--- 10.192.122.2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.412/10.043/19.674/9.631 ms

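To reduce the number of variables, I have routed a different network so that reverse path filtering is no longer a factor. There is now an additional route for `w.x.y.0/24` via `source_interface`, and the traffic goes from `w.x.y.1` to `a.b.c.d`: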

$ ip route show

w.x.y.0/24 dev source_interface scope link

10.192.122.2/31 dev destination_interface proto kernel scope link src 10.192.122.3

10.192.122.4/31 dev source_interface proto kernel scope link src 10.192.122.5

a.b.0.0/16 via 10.192.122.2 dev wg0

$ ip route get a.b.c.d from w.x.y.1 iif source_interface

a.b.c.d from w.x.y.1 via 10.192.122.2 dev destination_interface

cache iif source_interface

I see the same behaviour in `nft monitor`:

trace id 1115829d ip tablename prerouting packet: iif "source_interface" @ll,0,160 393920389728870845158477693125073444177958731995 ip saddr w.x.y.1 ip daddr a.b.c.d ip dscp cs0 ip ecn not-ect ip ttl 64 ip id 97 ip length 50 udp sport 60448 udp dport 60449 udp length 30 @th,64,96 6055826848460663089609086

trace id 1115829d ip tablename prerouting rule iifname "source_interface" udp dport 60448-60449 counter packets 174010 bytes 15393938 nftrace set 1 (verdict continue)

trace id 1115829d ip tablename prerouting verdict continue

trace id 1115829d ip tablename prerouting policy accept

trace id 1115829d ip tablename forward packet: iif "source_interface" oif "destination_interface" @ll,0,160 393920389728870845158477693125073444177958731995 ip saddr w.x.y.1 ip daddr a.b.c.d ip dscp cs0 ip ecn not-ect ip ttl 63 ip id 97 ip length 50 udp sport 60448 udp dport 60449 udp length 30 @th,64,96 6055826848460663089609086

trace id 1115829d ip tablename forward rule udp dport 60448-60449 counter packets 173045 bytes 15286564 (verdict continue)

trace id 1115829d ip tablename forward verdict continue

trace id 1115829d ip tablename forward policy accept

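After enabling `/sys/kernel/debug/tracing/events/fib/enable` I see the following, which looks correct. The lookup finds no route in the local table (255), but it correctly finds both the forward and the reverse route in the main table (254).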

<idle>-0 [002] ..s. 12852.684790: fib_table_lookup: table 255 oif 0 iif 0 proto 0 0.0.0.0/0 -> a.b.c.d/0 tos 0 scope 0 flags 0 ==> dev - gw 0.0.0.0/:: err -11

<idle>-0 [002] ..s. 12852.684793: fib_table_lookup: table 255 oif 0 iif 14 proto 0 w.x.y.1/0 -> a.b.c.d/0 tos 0 scope 0 flags 0 ==> dev - gw 0.0.0.0/:: err -11

<idle>-0 [002] ..s. 12852.684793: fib_table_lookup: table 254 oif 0 iif 14 proto 0 w.x.y.1/0 -> a.b.c.d/0 tos 0 scope 0 flags 0 ==> dev destination_interface gw 10.192.122.2/:: err 0

<idle>-0 [002] ..s. 12852.684794: fib_table_lookup: table 255 oif 0 iif 15 proto 0 a.b.c.d/0 -> w.x.y.1/0 tos 0 scope 0 flags 0 ==> dev - gw 0.0.0.0/:: err -11

<idle>-0 [002] ..s. 12852.684794: fib_table_lookup: table 254 oif 0 iif 15 proto 0 a.b.c.d/0 -> w.x.y.1/0 tos 0 scope 0 flags 0 ==> dev source_interface gw 0.0.0.0/:: err 0

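The `fib_table_lookup` lines are long, so here is a minimal sketch that reduces each one to table, source, destination, device and error code. The function name `summarize_fib_lookups` is hypothetical, and it assumes the field layout shown in the trace lines above. The `err -11` value is presumably `-EAGAIN`, which I read as "no match in this table, try the next one".

```shell
#!/bin/sh
# Hypothetical helper: read ftrace output on stdin and print one short
# line per fib_table_lookup event.
summarize_fib_lookups() {
    awk '
        /fib_table_lookup:/ {
            table = src = dst = dev = err = "?"
            for (i = 1; i <= NF; i++) {
                if ($i == "table") table = $(i + 1)
                else if ($i == "->") { src = $(i - 1); dst = $(i + 1) }
                else if ($i == "dev") dev = $(i + 1)
                else if ($i == "err") err = $(i + 1)
            }
            print "table " table ": " src " -> " dst " dev " dev " err " err
        }
    '
}
```

It could be fed from the trace buffer, for example `summarize_fib_lookups < /sys/kernel/debug/tracing/trace`, assuming debugfs is mounted at that path as in the post.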
14: source_interface@NONE: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 8973 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/gre 10.1.0.219 peer 10.1.0.1

15: destination_interface: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 1420 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/none