I'm trying to join a new node to my Kubernetes cluster:

```
kubeadm join 172.29.217.209:6443 --token jew814.e5iofd6qdz26d9q --discovery-token-ca-cert-hash sha256:21919c93d652f17c6207ddfc86660bdf2f652d34b3c2a7fca6b056bcaca
```

It fails with the following error:

```
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
[kubelet-check] Initial timeout of 40s passed.

Unfortunately, an error has occurred:
timed out waiting for the condition

This error is likely caused by:
- The kubelet is not running
- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:
- 'systemctl status kubelet'
- 'journalctl -xeu kubelet'

error execution phase kubelet-start: timed out waiting for the condition
To see the stack trace of this error execute with --v=5 or higher
```

I then checked the kubelet (v1.28.3) logs:

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.859742 2034 server.go:467] "Kubelet version" kubeletVersion="v1.28.3"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.859809 2034 server.go:469] "Golang settings" GOGC="" GOMAXPROCS="" GOTRACEBACK=""

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.860077 2034 server.go:630] "Standalone mode, no API client"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.876929 2034 server.go:518] "No api server defined - no events will be sent to API server"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.876952 2034 server.go:725] "--cgroups-per-qos enabled, but --cgroup-root was not specified. defaulting to /"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.877119 2034 container_manager_linux.go:265] "Container manager verified user specified cgroup-root exists" cgroupRoot=[]

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.877260 2034 container_manager_linux.go:270] "Creating Container Manager object based on Node Config" nodeConfig={"RuntimeCgroupsName":"","SystemCgroupsName":"","KubeletCgroupsName":">

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.877280 2034 topology_manager.go:138] "Creating topology manager with none policy"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.877289 2034 container_manager_linux.go:301] "Creating device plugin manager"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.877377 2034 state_mem.go:36] "Initialized new in-memory state store"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.877438 2034 kubelet.go:399] "Kubelet is running in standalone mode, will skip API server sync"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.877860 2034 kuberuntime_manager.go:257] "Container runtime initialized" containerRuntime="containerd" version="1.6.20~ds1" apiVersion="v1"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.878030 2034 volume_host.go:74] "KubeClient is nil. Skip initialization of CSIDriverLister"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: W1107 22:50:19.878183 2034 csi_plugin.go:189] kubernetes.io/csi: kubeclient not set, assuming standalone kubelet

Nov 07 22:50:19 k8sslave01 kubelet[2034]: W1107 22:50:19.878201 2034 csi_plugin.go:266] Skipping CSINode initialization, kubelet running in standalone mode

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.878420 2034 server.go:1232] "Started kubelet"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.878556 2034 kubelet.go:1579] "No API server defined - no node status update will be sent"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.878828 2034 server.go:194] "Starting to listen read-only" address="0.0.0.0" port=10255

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.879608 2034 fs_resource_analyzer.go:67] "Starting FS ResourceAnalyzer"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.879895 2034 server.go:162] "Starting to listen" address="0.0.0.0" port=10250

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.880142 2034 ratelimit.go:65] "Setting rate limiting for podresources endpoint" qps=100 burstTokens=10

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.880608 2034 server.go:462] "Adding debug handlers to kubelet server"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.881041 2034 server.go:233] "Starting to serve the podresources API" endpoint="unix:/var/lib/kubelet/pod-resources/kubelet.sock"

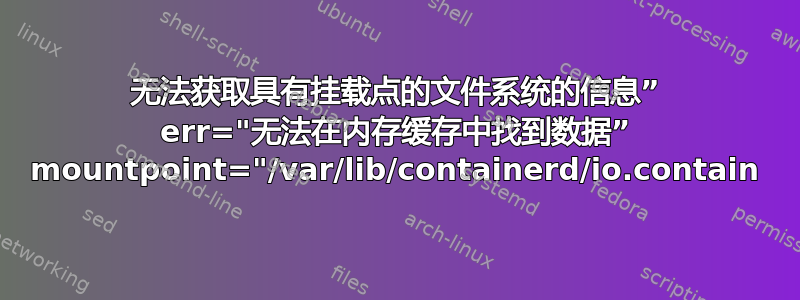

Nov 07 22:50:19 k8sslave01 kubelet[2034]: E1107 22:50:19.881520 2034 cri_stats_provider.go:448] "Failed to get the info of the filesystem with mountpoint" err="unable to find data in memory cache" mountpoint="/var/lib/containerd/io.contain>

Nov 07 22:50:19 k8sslave01 kubelet[2034]: E1107 22:50:19.881621 2034 kubelet.go:1431] "Image garbage collection failed once. Stats initialization may not have completed yet" err="invalid capacity 0 on image filesystem"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.881707 2034 volume_manager.go:291] "Starting Kubelet Volume Manager"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.883565 2034 desired_state_of_world_populator.go:151] "Desired state populator starts to run"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.883746 2034 reconciler_new.go:29] "Reconciler: start to sync state"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.901138 2034 kubelet_network_linux.go:50] "Initialized iptables rules." protocol="IPv4"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.905302 2034 kubelet_network_linux.go:50] "Initialized iptables rules." protocol="IPv6"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.905530 2034 status_manager.go:213] "Kubernetes client is nil, not starting status manager"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.905614 2034 kubelet.go:2303] "Starting kubelet main sync loop"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: E1107 22:50:19.905713 2034 kubelet.go:2327] "Skipping pod synchronization" err="[container runtime status check may not have completed yet, PLEG is not healthy: pleg has yet to be successful]"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.906704 2034 cpu_manager.go:214] "Starting CPU manager" policy="none"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.906721 2034 cpu_manager.go:215] "Reconciling" reconcilePeriod="10s"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.906739 2034 state_mem.go:36] "Initialized new in-memory state store"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.908908 2034 policy_none.go:49] "None policy: Start"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.909341 2034 memory_manager.go:169] "Starting memorymanager" policy="None"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.909360 2034 state_mem.go:35] "Initializing new in-memory state store"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.911409 2034 manager.go:471] "Failed to read data from checkpoint" checkpoint="kubelet_internal_checkpoint" err="checkpoint is not found"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.911541 2034 plugin_manager.go:118] "Starting Kubelet Plugin Manager"

Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.984679 2034 desired_state_of_world_populator.go:159] "Finished populating initial desired state of world"

What can I do to fix this? Here is the master node information:

```
[root@k8smasterone ~]# kubectl get nodes
NAME           STATUS   ROLES           AGE     VERSION
k8smasterone   Ready    control-plane   2y96d   v1.28.1
```

Here is the kubelet status on the new node:

```
root@k8sslave01:~# systemctl status kubelet
● kubelet.service - kubelet: The Kubernetes Node Agent
     Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; preset: enabled)
     Active: active (running) since Tue 2023-11-07 22:50:19 CST; 12min ago
       Docs: https://kubernetes.io/docs/
   Main PID: 2034 (kubelet)
      Tasks: 10 (limit: 2025)
     Memory: 38.0M
        CPU: 3.959s
     CGroup: /system.slice/kubelet.service
             └─2034 /usr/bin/kubelet

Nov 07 22:50:19 k8sslave01 kubelet[2034]: E1107 22:50:19.905713 2034 kubelet.go:2327] "Skipping pod synchronization" err="[container runtime status check may not have completed yet, PLEG is not healthy: pleg has yet to be successful]"
Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.906704 2034 cpu_manager.go:214] "Starting CPU manager" policy="none"
Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.906721 2034 cpu_manager.go:215] "Reconciling" reconcilePeriod="10s"
Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.906739 2034 state_mem.go:36] "Initialized new in-memory state store"
Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.908908 2034 policy_none.go:49] "None policy: Start"
Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.909341 2034 memory_manager.go:169] "Starting memorymanager" policy="None"
Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.909360 2034 state_mem.go:35] "Initializing new in-memory state store"
Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.911409 2034 manager.go:471] "Failed to read data from checkpoint" checkpoint="kubelet_internal_checkpoint" err="checkpoint is not found"
Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.911541 2034 plugin_manager.go:118] "Starting Kubelet Plugin Manager"
Nov 07 22:50:19 k8sslave01 kubelet[2034]: I1107 22:50:19.984679 2034 desired_state_of_world_populator.go:159] "Finished populating initial desired state of world"
```
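Two details in the output above may be related: the kubelet log reports "Standalone mode, no API client", and the CGroup line appears to show `/usr/bin/kubelet` running with no arguments at all. kubeadm's packaged systemd drop-in for `kubelet.service` normally passes `--bootstrap-kubeconfig`, `--kubeconfig` and `--config`, and without a kubeconfig the kubelet cannot contact the API server to perform the TLS bootstrap that `kubeadm join` waits for. That this is the cause here is an assumption, not something the output confirms. As a minimal sketch for checking it, `check_kubelet_args` below is a hypothetical helper (not part of kubeadm or kubelet) that reports which of those three flags are missing from a given kubelet command line:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a kubeadm/kubelet tool): given a kubelet command
# line, print "ok" if the flags kubeadm's systemd drop-in normally supplies
# are present, otherwise print which ones are missing.
check_kubelet_args() {
  local cmdline="$1"
  local missing=()
  local flag
  for flag in --bootstrap-kubeconfig --kubeconfig --config; do
    # Accept both "--flag=value" and "--flag value"; the surrounding spaces
    # keep "--kubeconfig" from matching inside "--bootstrap-kubeconfig".
    case " $cmdline " in
      *" $flag="* | *" $flag "*) ;;
      *) missing+=("$flag") ;;
    esac
  done
  if [ "${#missing[@]}" -eq 0 ]; then
    echo "ok"
  else
    echo "missing: ${missing[*]}"
  fi
}
```

On the node it could be fed the live command line, e.g. `check_kubelet_args "$(ps -o args= -p "$(pidof kubelet)")"`, while `systemctl cat kubelet` shows which unit file and drop-ins systemd actually loaded for the service.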

And here is the verbose output of `kubeadm join`:

```
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
[kubelet-check] Initial timeout of 40s passed.

Unfortunately, an error has occurred:
timed out waiting for the condition

This error is likely caused by:
- The kubelet is not running
- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:
- 'systemctl status kubelet'
- 'journalctl -xeu kubelet'

timed out waiting for the condition
error execution phase kubelet-start
k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run.func1
	cmd/kubeadm/app/cmd/phases/workflow/runner.go:260
k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).visitAll
	cmd/kubeadm/app/cmd/phases/workflow/runner.go:446
k8s.io/kubernetes/cmd/kubeadm/app/cmd/phases/workflow.(*Runner).Run
	cmd/kubeadm/app/cmd/phases/workflow/runner.go:232
k8s.io/kubernetes/cmd/kubeadm/app/cmd.newCmdJoin.func1
	cmd/kubeadm/app/cmd/join.go:179
github.com/spf13/cobra.(*Command).execute
	vendor/github.com/spf13/cobra/command.go:940
github.com/spf13/cobra.(*Command).ExecuteC
	vendor/github.com/spf13/cobra/command.go:1068
github.com/spf13/cobra.(*Command).Execute
	vendor/github.com/spf13/cobra/command.go:992
k8s.io/kubernetes/cmd/kubeadm/app.Run
	cmd/kubeadm/app/kubeadm.go:50
main.main
	cmd/kubeadm/kubeadm.go:25
runtime.main
	/usr/local/go/src/runtime/proc.go:250
runtime.goexit
	/usr/local/go/src/runtime/asm_amd64.s:1598
```