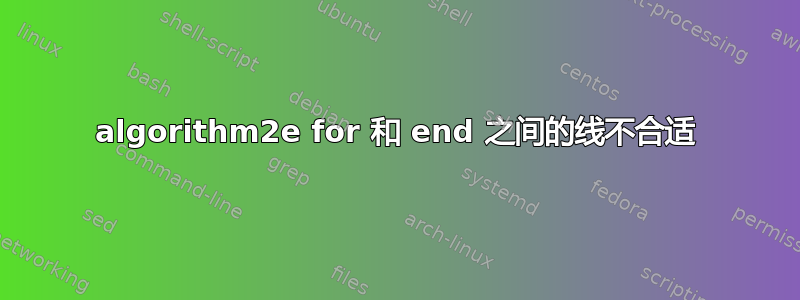

Does anyone know why this line does not line up with the first end, but lines up with the second one instead?

```latex
\documentclass{article}
\usepackage{mathrsfs,amsmath,bm,amsfonts}
\usepackage[ruled,vlined]{algorithm2e}
\begin{document}
\SetAlFnt{\small}
\begin{algorithm} \label{DENalg}
\SetAlgoLined
\caption{Algorithm for DENs}
\BlankLine
\For{number of tasks $t=1,...,T$}{
  \eIf{$t=1$}{
    train weights $\boldsymbol{W}^{t=1}$ in layer $\ell \in L$ with
    \begin{align} \label{DENfirsttask}
      \underset{\boldsymbol{W}^{t=1}}{\text{minimize}} \ \mathcal{L}(\boldsymbol{W}^{t=1}, \mathcal{D}_t)+\mu\sum_{l=1}^L \| W_l^{t=1} \| _1
    \end{align}
  }{
    selectively retrain $\boldsymbol{W}^{t-1}$ with
    \begin{align} \label{selecretrain}
      \underset{\boldsymbol{W}_{L,t}^{t}}{\text{minimize}} \ \mathcal{L}(\boldsymbol{W}_{L,t}^{t}; \boldsymbol{W}_{1:L-1}^{t-1}, \mathcal{D}_t)+\mu \| W_{L,t}^{t} \| _1
    \end{align}
    \If{$w_{i o_t} \neq 0$}{
      add $i$ to selected subnetwork $S$
    }
    \For{$l=L-1,...,1$}{
      \If{neuron $j \in S$ exists such that $\boldsymbol{W}_{l,ij}^{t-1} \neq 0$}{
        add neuron $i$ to $S$}
    }
    obtain $\boldsymbol{W}_S^t$ with
    \begin{align} \label{minW_S}
      \underset{\boldsymbol{W}_{S}^{t}}{\text{minimize}} \ \mathcal{L}(\boldsymbol{W}_{S}^{t}; \boldsymbol{W}_{S}^{t-1}, \mathcal{D}_t)+\mu \| W_{S}^{t} \| _2
    \end{align}
    \If{loss $\mathcal{L}_t >$ threshold \tau}{
      add $k$ neurons $\mathbf{h}^{\mathcal{N}}$ at all layers and obtain $\boldsymbol{W}_l^{\mathcal{N}}$ with
      \begin{align} \label{l1l2onS}
        \underset{\boldsymbol{W}_{l}^{\mathcal{N}}}{\text{minimize}} \ \mathcal{L}(\boldsymbol{W}_l^{\mathcal{N}}; {\boldsymbol{W}_l^{t-1}} \mathcal{D}_t) + \mu \| \boldsymbol{W}_l^{\mathcal{N}} \|_1
        \nonumber \\ + \gamma \sum_g \| \boldsymbol{W}_{l,g}^{\mathcal{N}} \|_{2}
      \end{align}
    }
    \For{$l=L-1,...,1$}{
      \If{$W_{S}^t = 0$}{remove neurons $\mathbf{h}_l^{\mathcal{N}}$}}
    obtain $\widetilde{\boldsymbol{W}}^t$ with
    \begin{align} \label{lastDEN}
      \underset{\boldsymbol{W}^t}{\text{minimize}} \ \mathcal{L}(\boldsymbol{W}^t; \mathcal{D}_t) + \lambda \| \boldsymbol{W}^t - \boldsymbol{W}^{t-1} \|_2^2
    \end{align}
    \For{all hidden units $i$}{
      calculate extent of catastrophic forgetting with
      \begin{align} \label{extentCF}
        \rho_i^{t} = \| w_{i}^{t} - w_{i}^{t-1} \|_2
      \end{align}
      \If{$\rho_i^{t} >$ threshold \sigma}{
        copy $i$ into new $i'$
      }
    }
    obtain $\boldsymbol{W}^t$ with initial $\widetilde{\boldsymbol{W}}^t$ using \eqref{lastDEN}
  }
}
\end{algorithm}
\end{document}
```

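It prints a long algorithm, and the only part that does not come out as intended is the one shown in the image at the top.

Does anyone know what is going wrong? Or is there already a problem with the braces earlier on?

My pseudocode is about 50 lines long; could that be a problem?

Thanks.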

Answer 1

I fixed quite a few errors for you and used the `equation` environment instead of `align`.

```latex
\documentclass{article}
\usepackage{mathrsfs,amsmath,bm,amsfonts}
\usepackage[ruled,vlined]{algorithm2e}
\begin{document}
\SetAlFnt{\footnotesize}
\begin{algorithm}
\SetAlgoLined
% \label placed after \caption so that \ref picks up the algorithm number
\caption{Algorithm for DENs} \label{DENalg}
\BlankLine
\For{number of tasks $t=1,...,T$}{
  \eIf{$t=1$}{
    train weights $\boldsymbol{W}^{t=1}$ in layer $\ell \in L$ with
    \begin{equation} \label{DENfirsttask}
      \underset{\boldsymbol{W}^{t=1}}{\text{minimize}} \ \mathcal{L}(\boldsymbol{W}^{t=1}, \mathcal{D}_t)+\mu\sum_{l=1}^L \| W_l^{t=1} \| _1
    \end{equation}
  }{
    selectively retrain $\boldsymbol{W}^{t-1}$ with
    \begin{equation}\label{selecretrain}
      \underset{\boldsymbol{W}_{L,t}^{t}}{\text{minimize}} \ \mathcal{L}(\boldsymbol{W}_{L,t}^{t}; \boldsymbol{W}_{1:L-1}^{t-1}, \mathcal{D}_t)+\mu \| W_{L,t}^{t} \| _1
    \end{equation}

    \If{$w_{i o_t} \neq 0$}{
      add $i$ to selected subnetwork $S$\strut
    }

    \For{$l=L-1,...,1$}{
      \If{neuron $j \in S$ exists such that $\boldsymbol{W}_{l,ij}^{t-1} \neq 0$}{
        add neuron $i$ to $S$\strut
      }
    }
    obtain $\boldsymbol{W}_S^t$ with
    \begin{equation} \label{minW_S}
      \underset{\boldsymbol{W}_{S}^{t}}{\text{minimize}} \ \mathcal{L}(\boldsymbol{W}_{S}^{t}; \boldsymbol{W}_{S}^{t-1}, \mathcal{D}_t)+\mu \| W_{S}^{t} \| _2
    \end{equation}

    % "threshold" and \tau now sit inside math mode
    \If{$\text{loss} \: \mathcal{L}_t > \text{threshold} \: \tau$}{
      add $k$ neurons $\mathbf{h}^{\mathcal{N}}$ at all layers and obtain $\boldsymbol{W}_l^{\mathcal{N}}$ with
      \begin{equation}
        \label{l1l2onS}
        \underset{\boldsymbol{W}_{l}^{\mathcal{N}}}{\text{minimize}} \
        % comma before \mathcal{D}_t added to match the other objectives
        \mathcal{L}(\boldsymbol{W}_l^{\mathcal{N}}; {\boldsymbol{W}_l^{t-1}},
        \mathcal{D}_t) + \mu \| \boldsymbol{W}_l^{\mathcal{N}} \|_1
        + \gamma \sum_g \| \boldsymbol{W}_{l,g}^{\mathcal{N}} \|_2
      \end{equation}
    }

    \For{$l=L-1,...,1$}{
      \If{$W_{S}^t = 0$}{remove neurons $\mathbf{h}_l^{\mathcal{N}}$}
    }
    obtain $\widetilde{\boldsymbol{W}}^t$ with
    \begin{equation} \label{lastDEN}
      \underset{\boldsymbol{W}^t}{\text{minimize}} \ \mathcal{L}(\boldsymbol{W}^t; \mathcal{D}_t) + \lambda \| \boldsymbol{W}^t - \boldsymbol{W}^{t-1} \|_2^2
    \end{equation}

    \For{all hidden units $i$}{
      calculate extent of catastrophic forgetting with
      \begin{equation} \label{extentCF}
        \rho_i^{t} = \| w_{i}^{t} - w_{i}^{t-1} \|_2
      \end{equation}

      \If{$\rho_i^{t} > \text{threshold} \: \sigma$}{
        copy $i$ into new $i'$
      }
    }
    obtain $\boldsymbol{W}^t$ with initial $\widetilde{\boldsymbol{W}}^t$ using \eqref{lastDEN}
  }
}
\end{algorithm}
\end{document}
```
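One of the fixes is easy to miss when comparing the two listings: the question writes `threshold \tau` and `threshold \sigma` outside math mode, which LaTeX rejects with a "Missing $ inserted" error. The following is a minimal sketch of the corrected condition on its own; the caption and the body text are placeholders, not part of the original algorithm.

```latex
\documentclass{article}
\usepackage{amsmath} % provides \text
\usepackage[ruled,vlined]{algorithm2e}
\begin{document}
\begin{algorithm}
  \caption{Math-mode condition (illustrative)}
  % Wrong: \If{loss $\mathcal{L}_t >$ threshold \tau}{...}  (\tau is outside $...$)
  \If{$\text{loss} \: \mathcal{L}_t > \text{threshold} \: \tau$}{
    add $k$ neurons
  }
\end{algorithm}
\end{document}
```

Writing the condition as `loss $\mathcal{L}_t >$ threshold $\tau$` would work as well; the only requirement is that `\tau` ends up inside math mode.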

When the spacing is off, the key seems to be adding a blank line before the `\If` or `\For`.
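The answer's listing also appends `\strut` to the last line of two `\If` blocks. `\strut` is an invisible, zero-width rule with the height and depth of a normal text line, so it forces that line to take up full line height. How much each of the two changes contributes is not stated, so the following is only a sketch that combines both; the condition `$a_l \neq 0$` and the step texts are made up for illustration.

```latex
\documentclass{article}
\usepackage[ruled,vlined]{algorithm2e}
\begin{document}
\begin{algorithm}
  \caption{Spacing sketch (illustrative)}
  first step

  % the blank line above separates the \For from the preceding text
  \For{$l = L-1, \dots, 1$}{
    \If{$a_l \neq 0$}{
      add $l$ to $S$\strut % invisible rule: full height and depth of a text line
    }
  }
\end{algorithm}
\end{document}
```

If the end marks of nested blocks still look misaligned, it may be worth trying the blank line and the `\strut` separately to see which one affects your layout.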

If you really do want to split a long equation, use the `split` environment inside it.
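As a sketch of what that could look like, here is the longest objective from the algorithm broken over two lines with `split` inside `equation`. The break point, the `\quad` indent, and the comma before `\mathcal{D}_t` (added to match the other objectives) are my own choices, not taken from the answer.

```latex
\documentclass{article}
\usepackage{amsmath} % provides split, \underset, \text, \boldsymbol
\begin{document}
\begin{equation} \label{l1l2onS}
  \begin{split}
    \underset{\boldsymbol{W}_{l}^{\mathcal{N}}}{\text{minimize}} \ &
      \mathcal{L}(\boldsymbol{W}_l^{\mathcal{N}}; \boldsymbol{W}_l^{t-1}, \mathcal{D}_t)
      + \mu \| \boldsymbol{W}_l^{\mathcal{N}} \|_1 \\
    & \quad + \gamma \sum_g \| \boldsymbol{W}_{l,g}^{\mathcal{N}} \|_2
  \end{split}
\end{equation}
\end{document}
```

Unlike `align` with `\nonumber`, `split` yields a single equation number for the whole expression, so no manual number suppression is needed.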